The Tech Lead’s Dilemma

As a tech lead, I’m the first point of contact when a production issue arises. If my team can't replicate it, it falls on me to figure out what’s wrong.

Many developers, when faced with a bug, rush to fix whatever crash appears in Crashlytics (a crash-reporting tool for mobile apps), hoping it will resolve the issue. My approach is different: I always focus first on replicating the issue in a development environment before issuing a fix. Without proper replication, any fix is just guesswork.

Debugging in mobile development presents unique challenges due to the sheer number of devices, OS versions, network conditions, and memory constraints. Is the issue specific to Android or iOS? Does it occur only on low-end devices? Is it a network-related failure? These are just a few of the countless variables I have to consider.

A little while back I read The Sign of the Four by Sir Arthur Conan Doyle, and one line from Sherlock Holmes has stuck with me:

"When you have eliminated the impossible, whatever remains, however improbable, must be the truth."

This quote perfectly encapsulates my debugging methodology. Let me share a story about a particularly challenging bug that reshaped how I approach debugging.

The Bug That Nearly Broke Me

A few years ago, I had recently been promoted to Tech Lead for an Android app of a major Australian airline carrier. This was my first leadership role in an app with a huge user base. One morning, I received an urgent call from customer support:

"The data is not loading in the app!"

That was all the context I had to go on. No crash logs. No error messages. Just a vague problem that needed immediate attention.

Step 1: Validating the Issue

Our monitoring tools had not picked up the issue because nothing was crashing: the app was handling null data gracefully, which made the failure silent. With no remote crash logs to go on, I had to check it manually.

- Downloaded the app from the Play Store – Issue replicated.

- Ran the latest version in a dev environment – Everything worked fine.

- Checked the differences between production and dev data – No significant differences.

- Ran the production version locally (as a debug build) – Data loaded fine.

At this point, nothing in the code or data seemed to be the culprit.

Step 2: The Breakthrough

Since the codebase looked solid, I focused on what was different between the dev and production builds.

- The only remaining difference was the build type.

- Unlike debug builds, release builds typically go through ProGuard/R8, which shrinks, obfuscates, and optimizes the code.

- I built a release version locally and – boom! The issue was replicated!

Now I had a lead. However, debugging a release build is far less straightforward: it is not debuggable by default, its stack traces are obfuscated, and log output is often stripped or disabled.

Step 3: Finding the Root Cause

With limited options, I decided to examine the updated libraries since some dependencies had changed in the last deployment. Normally, I check the release notes for breaking changes, but nothing major was mentioned.

I spent hours manually reviewing each dependency’s ProGuard rules. Finally, I found that two lines required for release builds were missing from the rules for one of Retrofit's dependencies.

Adding those two lines solved the issue instantly. Never have two lines of code outside my app caused me so much stress.
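To illustrate the general mechanism (not the exact rules from this incident, which are not reproduced here), below is a minimal Java sketch of how a missing keep rule can fail silently. It assumes a reflection-based converter that matches JSON keys to field names. `Flight`, `ObfuscatedFlight`, and `ReflectiveMapper` are hypothetical names invented for the example, and the mapper is a toy stand-in, not a real library.

```java
import java.lang.reflect.Field;
import java.util.Map;

// Hypothetical model as written in source: field names match the JSON keys.
class Flight {
    String flightNumber;
    String status;
}

// The same model as it might look after obfuscation without a keep rule:
// the fields still exist, but their names no longer match the JSON keys.
class ObfuscatedFlight {
    String a;
    String b;
}

// Toy reflection-based mapper standing in for a real converter library.
class ReflectiveMapper {
    static <T> T map(Map<String, String> json, Class<T> type) {
        try {
            T instance = type.getDeclaredConstructor().newInstance();
            for (Field field : type.getDeclaredFields()) {
                field.setAccessible(true);
                // A key that is absent yields null: no exception, no crash report.
                field.set(instance, json.get(field.getName()));
            }
            return instance;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Could not map " + type.getName(), e);
        }
    }
}

class ObfuscationDemo {
    public static void main(String[] args) {
        Map<String, String> json = Map.of("flightNumber", "XX123", "status", "ON_TIME");

        Flight debug = ReflectiveMapper.map(json, Flight.class);
        ObfuscatedFlight release = ReflectiveMapper.map(json, ObfuscatedFlight.class);

        System.out.println("debug build:   " + debug.flightNumber); // XX123
        System.out.println("release build: " + release.a);          // null
    }
}
```

Both calls succeed without throwing, which is why a failure of this kind never reaches a crash reporter: the "release" object is simply full of nulls.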

That day, I learned a painful but valuable lesson. Logs aren’t always available in production, and relying on them too much makes blind spots even harder to debug.

Lessons Learned: How It Changed My Debugging Approach

The very next day, I implemented changes that have since become my golden rules for debugging and production readiness:

1. Test Release Builds, Not Just Debug Builds

Many teams only test debug builds, assuming production behavior will be the same. After this incident, I ensured:

- All pre-production QA builds use release configurations.

- CI/CD pipelines generate and verify release builds before deployment.

2. Introduce Non-Crash Remote Logging

Many production issues don’t trigger crashes, making them invisible to tools like Crashlytics. To counter this:

- I added strategic remote logging for critical operations.

- This ensures that even if an issue doesn’t crash the app, we can still track abnormal behavior in production.
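As a rough sketch of what non-crash logging around a critical operation could look like, the Java below wraps a data load and reports results that are "handled" but abnormal. `RemoteLogger`, `InMemoryRemoteLogger`, and `MonitoredLoader` are hypothetical names for illustration. In a real app the interface would be backed by whatever remote logging service the team uses.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical abstraction over the remote logging backend.
interface RemoteLogger {
    void logNonFatal(String event, String detail);
}

// In-memory stand-in so the sketch runs without any backend.
class InMemoryRemoteLogger implements RemoteLogger {
    final List<String> events = new ArrayList<>();

    @Override
    public void logNonFatal(String event, String detail) {
        events.add(event + ": " + detail);
    }
}

// Wraps a critical load and reports outcomes that would otherwise be silent.
class MonitoredLoader {
    private final RemoteLogger logger;

    MonitoredLoader(RemoteLogger logger) {
        this.logger = logger;
    }

    <T> List<T> load(String operation, Supplier<List<T>> source) {
        List<T> result;
        try {
            result = source.get();
        } catch (RuntimeException e) {
            logger.logNonFatal(operation, "failed: " + e);
            return List.of();
        }
        if (result == null || result.isEmpty()) {
            // The UI can still degrade gracefully, but now the team hears about it.
            logger.logNonFatal(operation, "returned no data");
            return List.of();
        }
        return result;
    }
}

class RemoteLoggingDemo {
    public static void main(String[] args) {
        InMemoryRemoteLogger logger = new InMemoryRemoteLogger();
        MonitoredLoader loader = new MonitoredLoader(logger);

        List<String> ok = loader.load("loadFlights", () -> List.of("XX123"));
        List<String> silent = loader.load("loadFlights", () -> null);

        System.out.println("loaded: " + ok + ", then: " + silent);
        System.out.println("reported events: " + logger.events);
    }
}
```

The point of the design is that graceful handling and reporting are separate concerns: the user still sees an empty state instead of a crash, while the abnormal result is recorded.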

3. Always Replicate Before Fixing

If a bug isn’t replicated, it isn’t fixed. Any fix without replication is a guess and might not actually solve the root cause.

- Even if I have to simulate network failures, force a scenario, or fake bad data, I insist on replication.
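One way to force such scenarios is to put a small seam between the app and the network and swap in fakes. The Java sketch below is only an illustration under that assumption. `FlightApi` and `StatusRepository` are hypothetical names, and the fakes simulate a timeout and a blank payload.

```java
import java.io.IOException;
import java.util.Optional;

// Hypothetical seam between the app and the network.
interface FlightApi {
    String fetchStatus(String flightNumber) throws IOException;
}

// Code under investigation: swallows failures and returns "no data".
class StatusRepository {
    private final FlightApi api;

    StatusRepository(FlightApi api) {
        this.api = api;
    }

    Optional<String> status(String flightNumber) {
        try {
            String raw = api.fetchStatus(flightNumber);
            if (raw == null || raw.trim().isEmpty()) {
                return Optional.empty();
            }
            return Optional.of(raw.trim());
        } catch (IOException e) {
            return Optional.empty();
        }
    }
}

class ReplicationDemo {
    public static void main(String[] args) {
        // Fakes that force the scenarios instead of waiting for them to occur.
        FlightApi healthy = flightNumber -> "ON_TIME";
        FlightApi timeout = flightNumber -> { throw new IOException("simulated timeout"); };
        FlightApi badData = flightNumber -> "   ";

        System.out.println("healthy:  " + new StatusRepository(healthy).status("XX123"));
        System.out.println("timeout:  " + new StatusRepository(timeout).status("XX123"));
        System.out.println("bad data: " + new StatusRepository(badData).status("XX123"));
    }
}
```

With the fakes in place, the "data is not loading" symptom can be reproduced on demand, and the same fakes can later confirm that a fix actually changes the outcome.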

4. Stay Calm Under Pressure

This experience also shaped my mindset towards debugging in high-pressure situations:

- Panic doesn’t solve problems. Methodically narrowing down possibilities does.

- Stressing over a release doesn’t help. Instead, I focus on catching issues early.

Today, I’m known as the most relaxed person during major releases. When people ask why I don’t stress about big deployments, my answer is simple:

"I’ve tested everything to the best of my ability. If something still breaks, I want to find out ASAP so I can fix it quickly."

Conclusion

Debugging mobile applications is a different beast altogether. The sheer number of devices, OS versions, connectivity scenarios, and build configurations makes production issues a nightmare to troubleshoot. But experience has taught me a simple truth:

"Good debugging is not about fixing a crash. It’s about understanding the root cause and ensuring it never happens again."

This bug changed my approach forever. And if you’re a mobile developer, I hope these lessons save you from banging your head against the wall when your next big production bug appears.

Omar Mujtaba

Hi there! I’m a mobile developer from Sydney who loves sharing insights on building apps. With experience in mobile and backend development, I break down tech topics to make them easy and interesting.

follow me :

Related Posts

Giving Feedback That Matters: Lessons from a Technical Lead

Apr 01, 2025

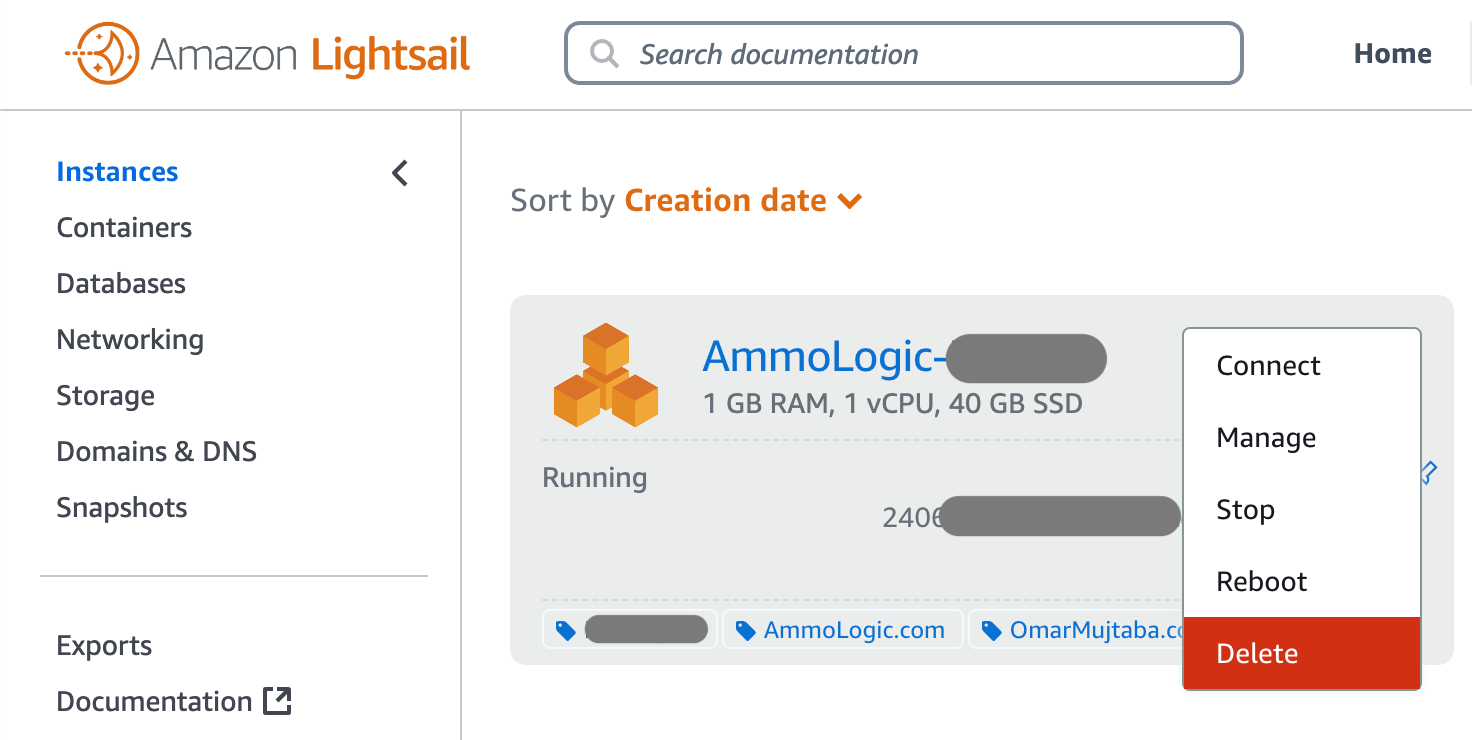

Farewell DIY Server: A Mobile Dev’s Journey Self-Hosting Websites

Nov 10, 2024